Just Noticeable Defocus Blur Detection and Estimation

Jianping Shi

Li Xu

Jiaya Jia

The Chinese University of Hong Kong


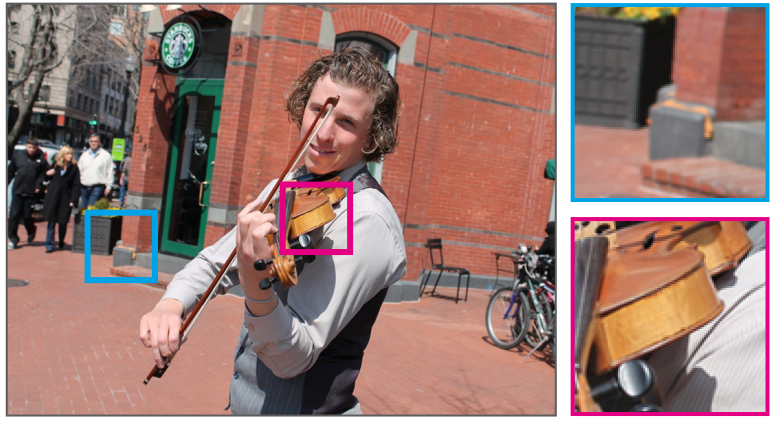

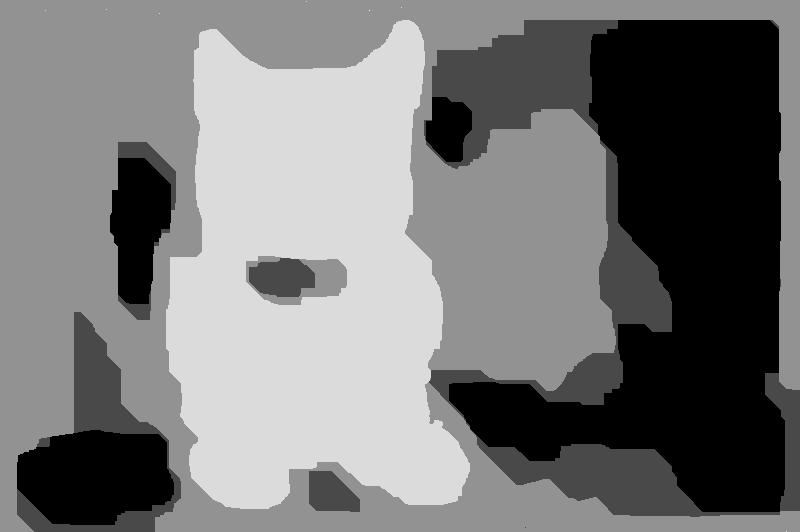

Fig. 1 The above image was downloaded from flickr.com (http://goo.gl/aijRh3) and was taken with aperture size f/5.6 and exposure time 1/500s. When a supposedly clear image is viewed at its original resolution, slight blurriness can still be noticed. This is a general phenomenon.

Abstract

We tackle a fundamental yet challenging problem: detecting and estimating just noticeable blur (JNB) caused by defocus that spans a small number of pixels in images. This type of blur is very common in photography. Although it is not strong, the slight edge blurriness contains informative clues related to depth. We found that existing blur descriptors, which are based on local information, cannot reliably distinguish this type of small blur from unblurred structures. We propose a simple yet effective blur feature via sparse representation and image decomposition. It directly establishes correspondence between sparse edge representation and blur strength estimation. Extensive experiments demonstrate the generality and robustness of this feature.
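To make the idea of a sparsity-based feature concrete, the following is a minimal sketch and not the authors' implementation. It assumes that the feature for a patch is the number of dictionary atoms a greedy sparse coder (orthogonal matching pursuit) needs to reach a given reconstruction error. The function name `omp_atom_count`, the tolerance `tol`, and the toy identity dictionary are all hypothetical; this page does not describe the learned dictionary or the exact feature definition.

```python
import numpy as np

def omp_atom_count(signal, dictionary, tol=1e-6, max_atoms=None):
    """Run orthogonal matching pursuit and return the number of atoms used.

    signal:     1-D array of length n (e.g. a vectorized image patch).
    dictionary: 2-D array of shape (n, k) whose columns are atoms.
    The count is an assumed stand-in for a sparsity-based blur feature.
    """
    signal = np.asarray(signal, dtype=float)
    dictionary = np.asarray(dictionary, dtype=float)
    if max_atoms is None:
        max_atoms = dictionary.shape[1]

    residual = signal.copy()
    chosen = []
    while len(chosen) < max_atoms and np.linalg.norm(residual) > tol:
        # Pick the atom most correlated with the current residual.
        scores = np.abs(dictionary.T @ residual)
        if chosen:
            scores[chosen] = -1.0  # never select the same atom twice
        chosen.append(int(np.argmax(scores)))
        # Re-fit all chosen atoms jointly by least squares.
        sub = dictionary[:, chosen]
        coeffs, *_ = np.linalg.lstsq(sub, signal, rcond=None)
        residual = signal - sub @ coeffs
    return len(chosen)

# Toy usage with an identity dictionary (hypothetical, for illustration only).
atoms = np.eye(8)
patch = 3.0 * atoms[:, 0] + 2.0 * atoms[:, 5]
count = omp_atom_count(patch, atoms)
```

Under this assumption, evaluating the count on every local patch of an image would give a per-pixel feature map; how that count relates to blur strength depends on the dictionary and is not specified here.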

Downloads

Results

All results of our method are available here.

|

|

|

|

| (a) Inpug |

(d) Bae and Durand[2] |

(g) Liu et al.[19] |

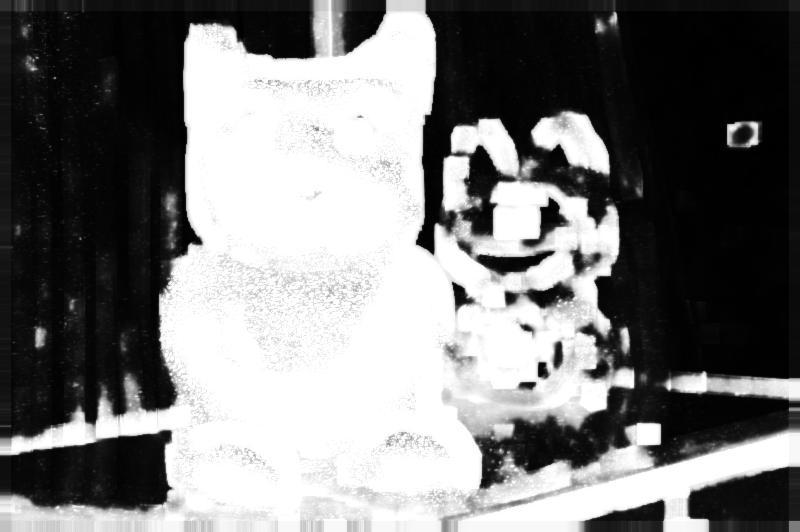

(j) Our raw feature |

|

|

|

|

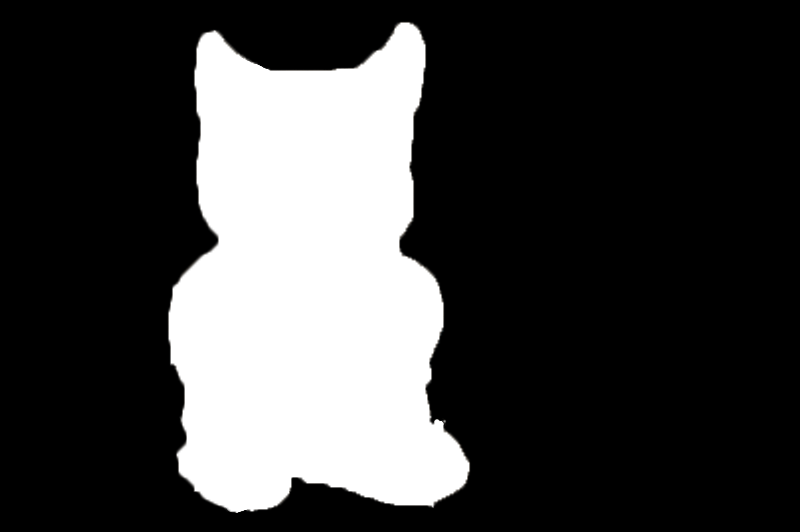

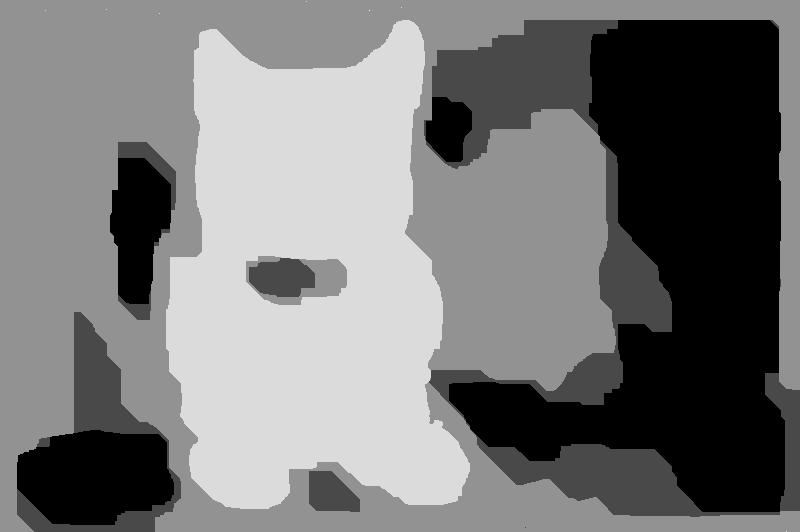

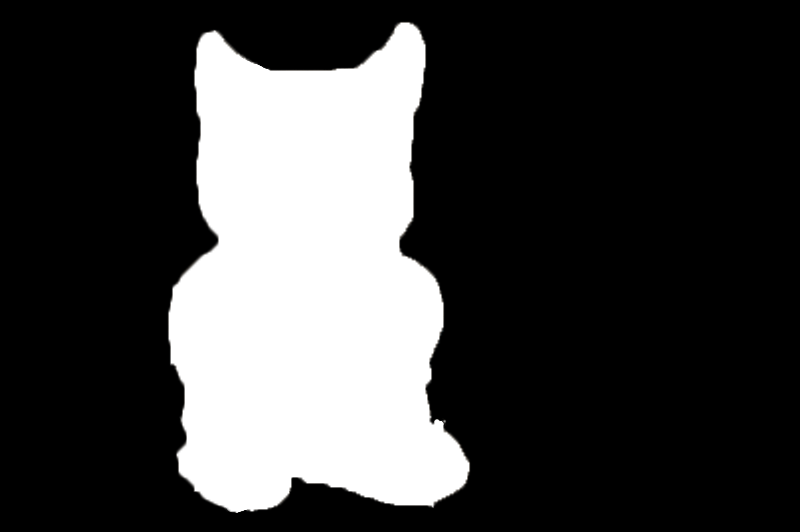

| (b) Ground truth |

(e) Zhuo and Sim[31] |

(h) Shi et al.[22] |

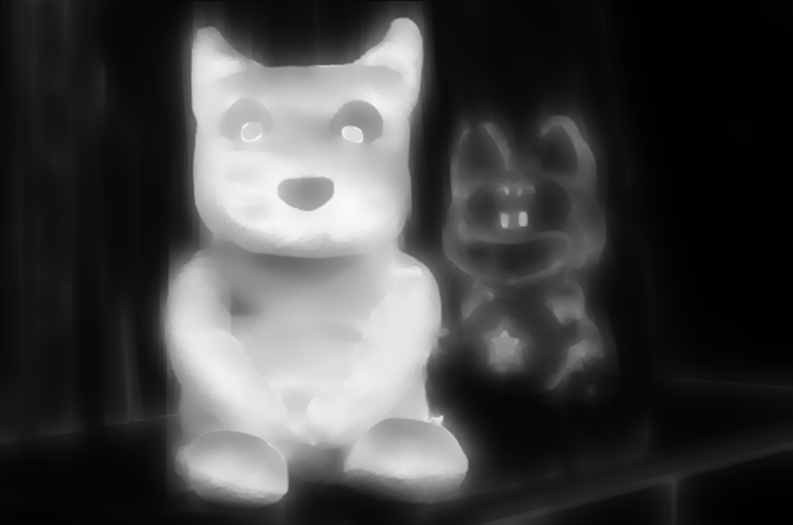

(k) Our final blur result |

|

|

|

|

| (c) Chakrabarti et al.[4] |

(f) Zhu et al.[30] |

(i) Su et al.[23] |

(l) Our binary map |

Fig. 2 Blur map comparison.

Applications

Our blur maps benefit several applications, including deblurring, refocusing, and depth estimation.

Deblurring

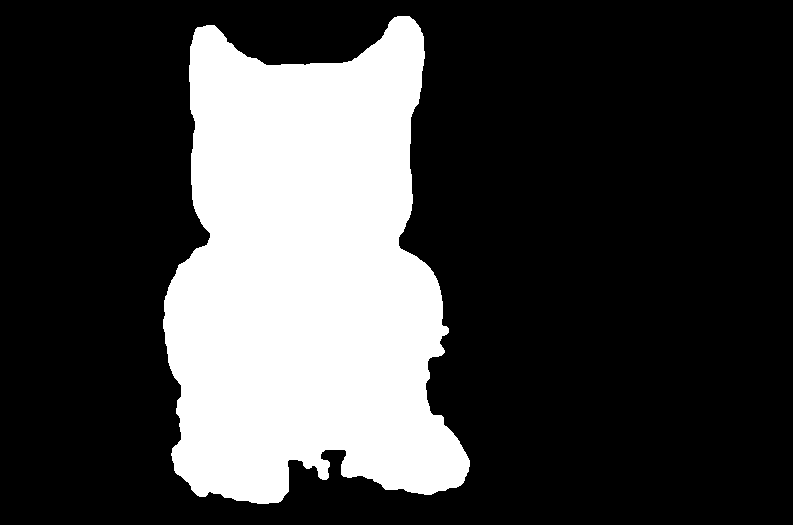

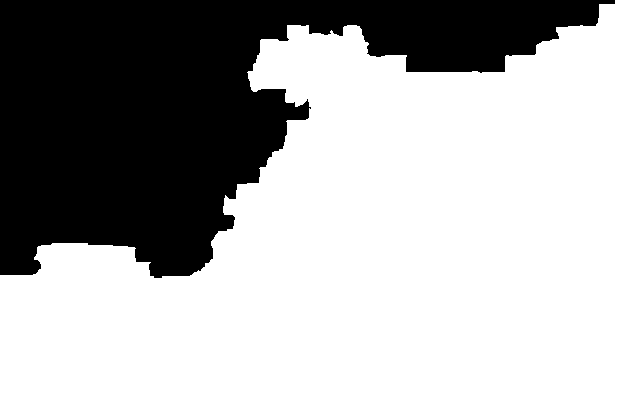

|

|

|

|

|

| Input |

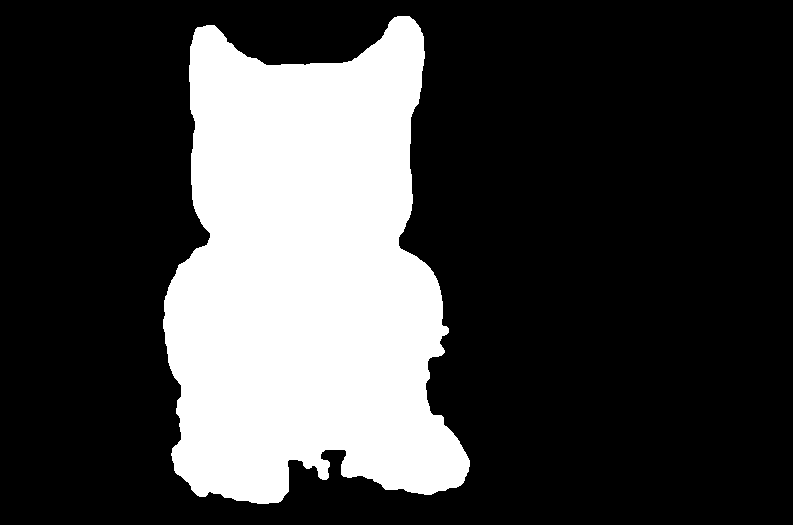

Ground Truth Mask |

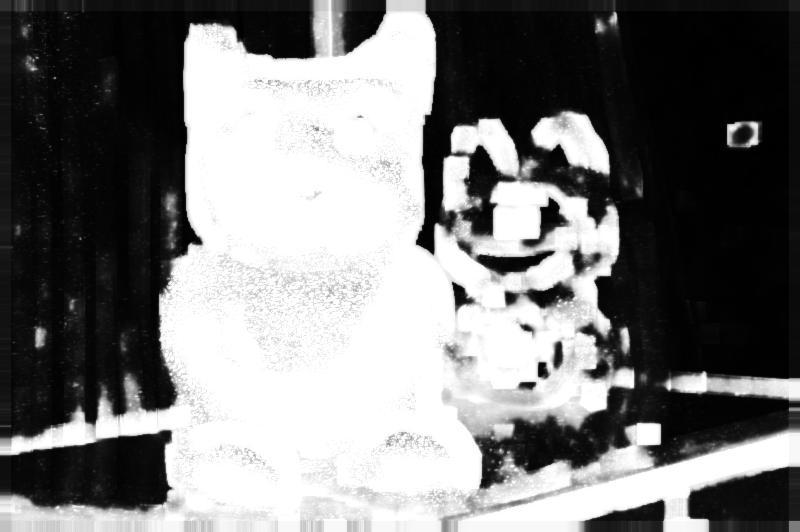

Feature Map |

Blur Mask |

Deblurring Result |

Fig. 3 Deblurring using our blur estimate.

Refocus

|

|

|

|

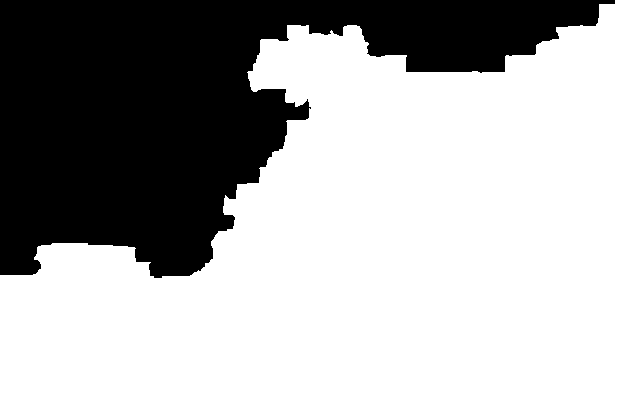

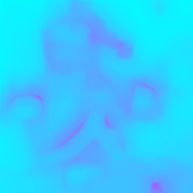

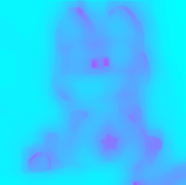

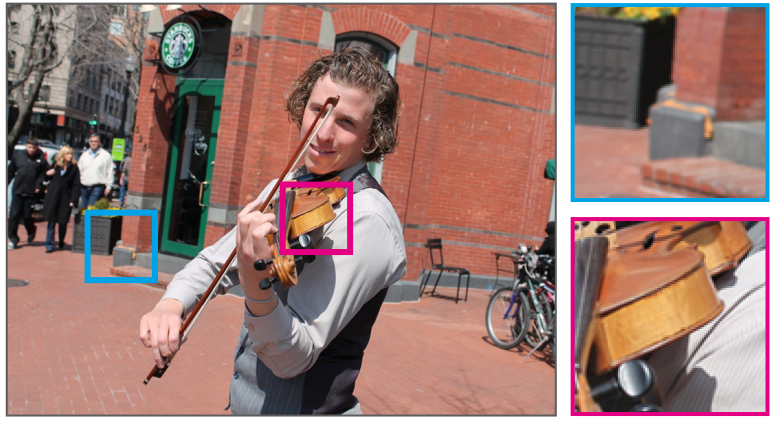

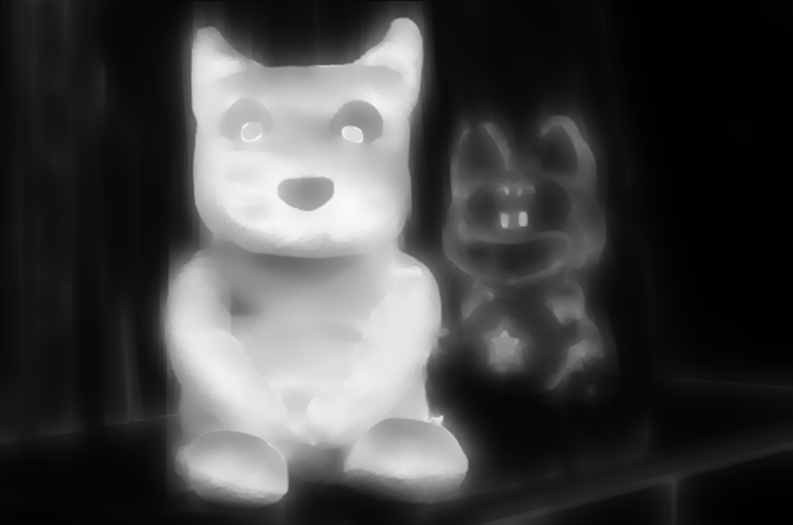

| (a) Input |

(b) Feature Map |

(c) Refocus Result |

(d) Refocus Result |

Fig. 4 Refocusing using our blur map. (a) is the input image. (b) is our learned feature map. (c) and (d) shows different refocusing result given

our blur feature map. The arrow highlights the focus point.

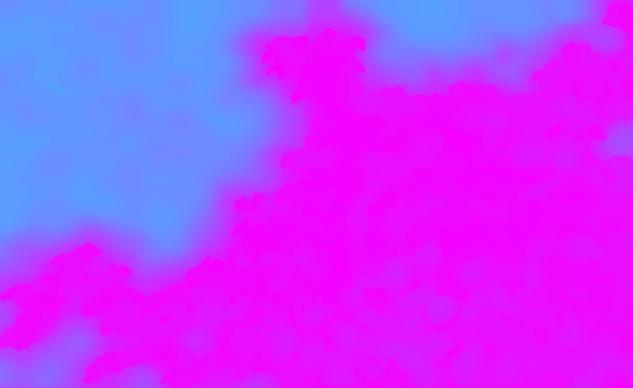

Depth Estimation

Fig. 5 Calibrated images with their corresponding blur features. The images were taken with aperture size f/5.0 at different distances: (a) 0.5m, (b) 0.6m, (c) 0.7m, (d) 0.8m, (e) 0.9m, and (f) 1.0m.

Fig. 6 (a), (c), and (e) are three test examples with distances 0.8m, 0.5m, and 0.7m. (b), (d), and (f) are their corresponding features
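One way calibrated features like those in Fig. 5 could be turned into a distance estimate is a simple lookup with linear interpolation. The sketch below is only an illustration under stated assumptions: it assumes the mean blur feature changes monotonically with distance over the calibrated range, and the feature values in `calib_features` are placeholder numbers, not measurements from the paper. The function name `estimate_distance` is hypothetical.

```python
import numpy as np

def estimate_distance(feature, calib_features, calib_distances_m):
    """Interpolate a distance (in meters) from a calibrated feature curve.

    Assumes a monotonic relation between the blur feature and distance.
    """
    xs = np.asarray(calib_features, dtype=float)
    ys = np.asarray(calib_distances_m, dtype=float)
    order = np.argsort(xs)  # np.interp needs increasing x values
    return float(np.interp(feature, xs[order], ys[order]))

# Distances from Fig. 5; the feature values are PLACEHOLDERS, not real data.
calib_distances_m = [0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
calib_features = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

d_on_grid = estimate_distance(4.0, calib_features, calib_distances_m)
d_between = estimate_distance(2.5, calib_features, calib_distances_m)
```

Features outside the calibrated range are clamped to the nearest endpoint by `np.interp`, so such estimates should be treated as unreliable.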